Unlocking LLMs on My Local Machine

My gaming laptop’s screen stayed black no matter what I tried. After resetting it several times and searching the web for answers, I suspected a hardware issue. At Best Buy, the folks at Geek Squad confirmed my suspicion and offered a repair at a price that could buy half of a new gaming laptop.

So I decided to buy a new gaming laptop instead, and I was excited about the NVIDIA RTX 4070 that came with it. After reinstalling all my applications, I realized that I could start running open-source LLMs on this new entry-level hardware.

An Oobabooga Experience

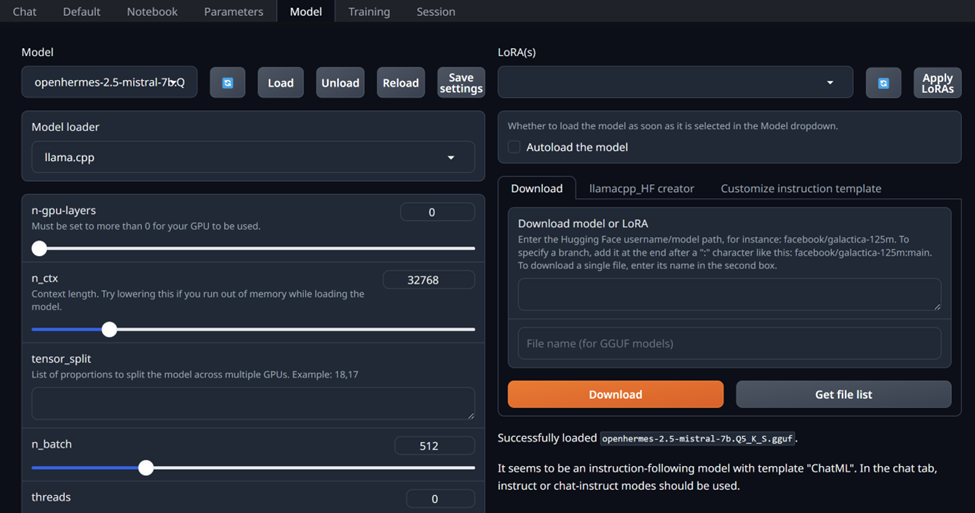

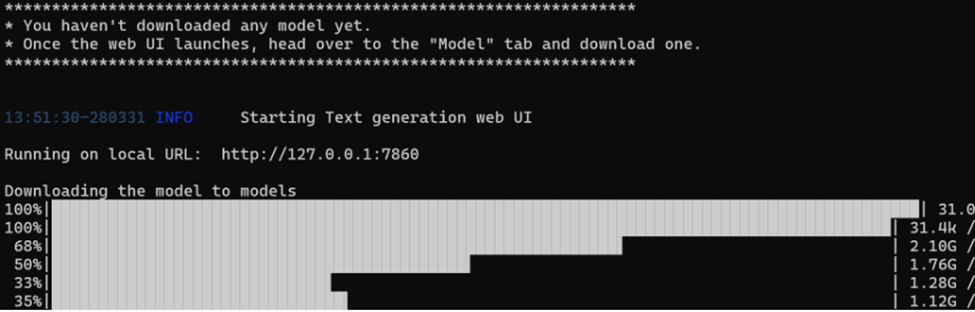

Knowing that Hugging Face maintains a leaderboard of popular open-source LLMs, I researched the available front ends for interacting with those models. One frequently referenced front end is oobabooga (also known as Text Generation Web UI), a Gradio web UI for LLMs. Following the instructions in the repository, I quickly got the interface running on my local machine.

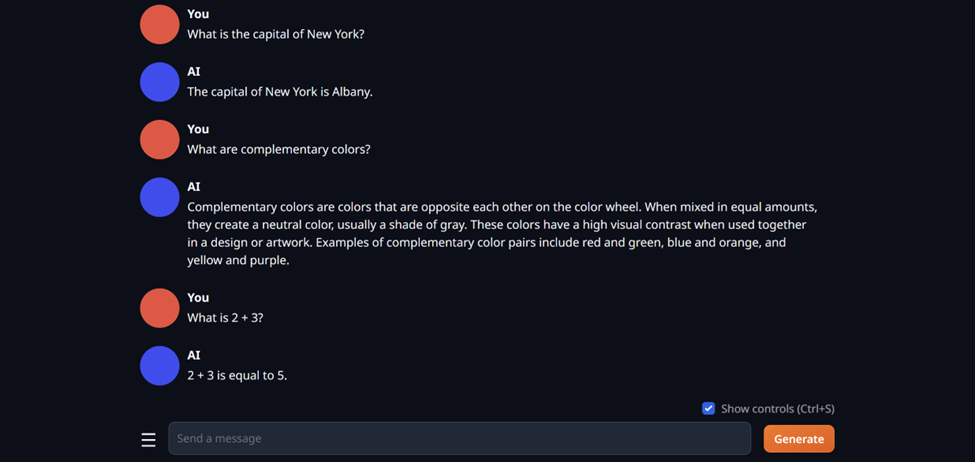

Downloading models was also quite easy: I only had to copy the model’s name from Hugging Face, head to the “Model” tab, and press “Download”. After loading the model, I could start asking questions to test it. Output was generated at 0.40 – 1.87 tokens/second, which is very slow compared to something like Gemini’s output of 50 tokens/second.
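To put those rates in perspective, here is a minimal sketch that converts tokens/second into waiting time. The 200-token response length is my own assumption for illustration, not something I measured, and `generation_seconds` is just a hypothetical helper name.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Return how long it takes to generate num_tokens at a given rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Assumption: a medium-length answer of about 200 tokens.
RESPONSE_TOKENS = 200

# Rates quoted in this post (tokens/second).
rates = {
    "local, slowest": 0.40,
    "local, fastest": 1.87,
    "Gemini": 50.0,
}

for label, rate in rates.items():
    seconds = generation_seconds(RESPONSE_TOKENS, rate)
    print(f"{label}: {seconds:.0f} s")
```

Under that assumption, the same answer takes about 500 seconds (over 8 minutes) at my slowest local rate, about 107 seconds at my fastest, and about 4 seconds at 50 tokens/second.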

Text Generation Web UI also has a healthy list of extensions, including ones for voice generation, image generation, short- and long-term memory, and web search. I can’t wait to build a document summarizer that runs entirely on my local machine.

Appreciating Open-Source

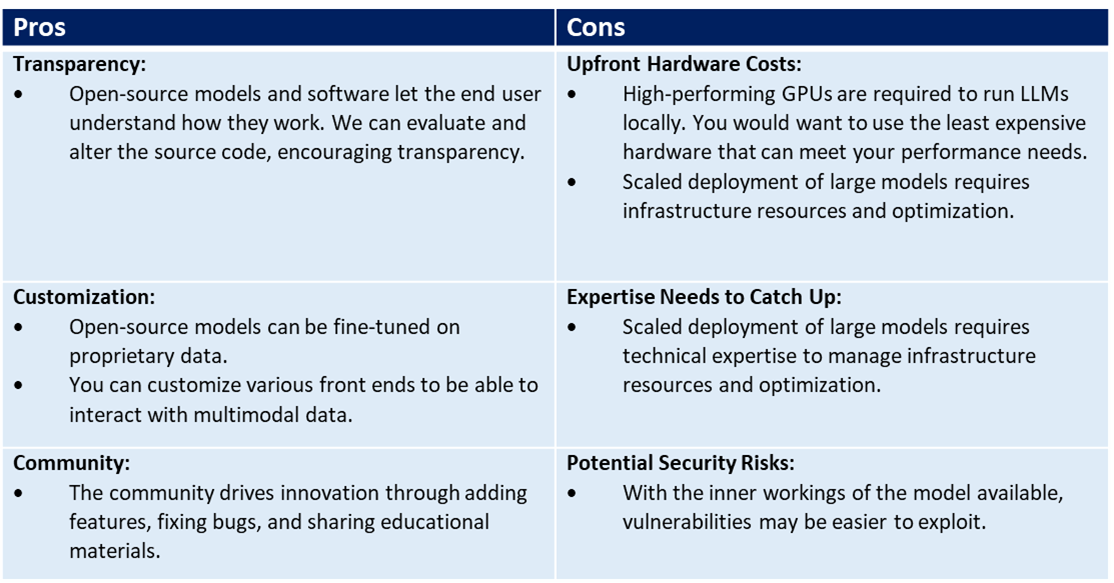

With the ability to use my own local LLM, I dove into the world of open-source models and implementations and summarized the pros and cons:

I’m excited about the potential that open-source projects offer the world and about the solutions that can help them scale.